What is the right way to learn?

The myths of “good” learning

Remember when we all thought highlighting textbooks like a rainbow warrior was the secret to academic success? Well, turns out we were doing it wrong all along! In their myth-busting book “Make It Stick,” Brown, Roediger, and McDaniel drop some truth bombs about how our brains actually learn - and it’s not through those neat, color-coded notes we were so proud of.

This research aligns beautifully with David Perkins’ insights in “Making Learning Whole,” where he argues that real learning isn’t about drilling isolated skills - it’s about playing the “whole game.” Think about it like learning baseball: you wouldn’t just practice swinging a bat in isolation for months before ever playing a real game. So why do we often teach this way?

Here are some key myths about learning that both books help us bust:

“Just keep reading it over and over!” - Not so fast! Turns out repeated exposure and rereading actually fool us into thinking we know the material, while active recall (like self-quizzing) is what really gets knowledge to stick in our brains.

“I need to focus on one thing until I master it” - Surprise! Your brain actually learns better when you mix things up. Interleaving different topics and spacing out your practice usually beats cramming when it comes to long-term retention. Perkins makes a related point with “playing out of town” - taking your learning into new contexts and situations so it doesn’t stay stuck where you first picked it up.

“Learning should feel easy if I’m doing it right” - Both books emphasize that some difficulty is actually good for learning! Whether it’s the “desirable difficulties” described in Make It Stick or Perkins’ “working on the hard parts,” struggling with material often leads to deeper understanding.
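
To make the interleaving and spacing idea a bit more concrete, here is a minimal Python sketch. It is only an illustration, not something taken from either book: the topic names, the problem labels, and the review gaps of 1, 3, and 7 days are all made-up assumptions.

```python
from itertools import zip_longest


def blocked_schedule(topics):
    """Finish every problem in one topic before starting the next (cramming style)."""
    return [(topic, problem) for topic, problems in topics.items() for problem in problems]


def interleaved_schedule(topics):
    """Rotate through the topics, taking one problem from each in turn."""
    # One list of (topic, problem) pairs per topic
    per_topic = [[(topic, p) for p in problems] for topic, problems in topics.items()]
    schedule = []
    # zip_longest pads shorter topics with None, which we skip
    for practice_round in zip_longest(*per_topic):
        schedule.extend(item for item in practice_round if item is not None)
    return schedule


def spaced_review_days(first_day, gaps):
    """Return the days on which to review, given the gap (in days) before each review."""
    days = []
    day = first_day
    for gap in gaps:
        day += gap
        days.append(day)
    return days


# Hypothetical practice set: the names are placeholders
topics = {
    "fractions": ["f1", "f2", "f3"],
    "ratios": ["r1", "r2"],
    "percentages": ["p1", "p2", "p3"],
}

for topic, problem in interleaved_schedule(topics):
    print(topic, problem)

# Illustrative gaps only: review 1, then 3, then 7 days after the previous session
print(spaced_review_days(0, (1, 3, 7)))
```

The blocked version works through all of one topic before touching the next, while the interleaved version keeps switching, which is the kind of mixing the myth above argues against avoiding. The review gaps are just one way to spread practice out; nothing here says they are the right ones.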

AI will give us all the answers while we learn superficially

Now, in our AI-powered world where ChatGPT can write our essays and solve our math problems faster than we can say “neural network,” we’re facing a fascinating challenge. Perkins’ concept of “problem finding” versus “problem solving” becomes even more crucial - while AI excels at solving given problems, it can’t replace the uniquely human skill of identifying which problems are worth solving in the first place.

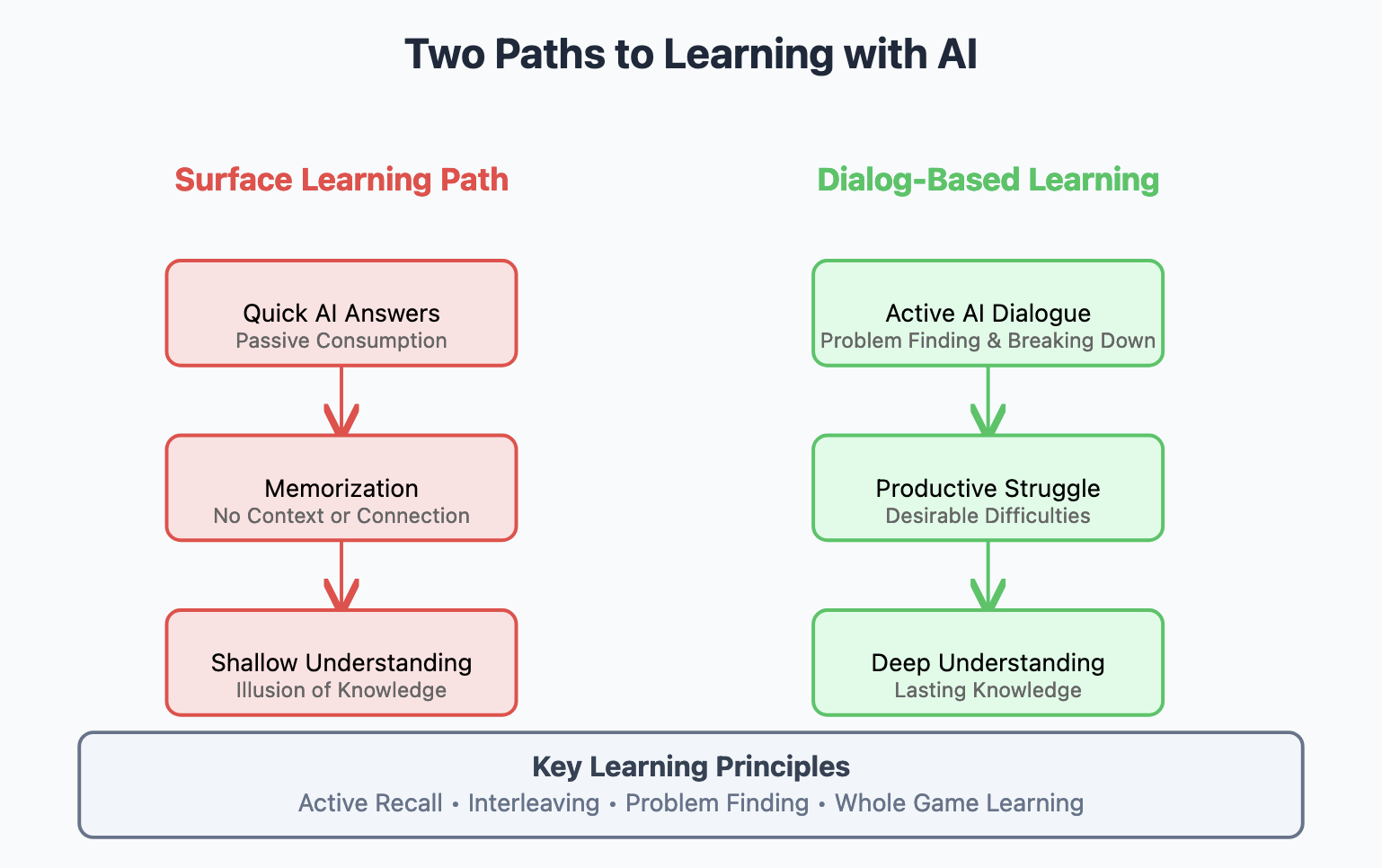

Neither book is about AI, but read together they point to a concerning implication: real learning requires struggle and active engagement, and AI tools threaten to remove exactly those elements. As these tools become more sophisticated, we risk creating a generation of passive consumers who bypass the vital learning experiences that build true understanding. If we’re not careful, what Perkins calls “learning the game of learning” could be replaced by shallow, AI-dependent shortcuts - leading to surface-level knowledge without the deeper neural connections that come from genuine struggle and practice. The convenience of AI assistance could become a Trojan horse, quietly eroding our capacity for deep, meaningful learning.

The path forward: Dialog Engineering

What’s emerging is a new paradigm for learning with AI - one that doesn’t replace human understanding but amplifies it. Answer.ai’s concept of “Dialog Engineering” offers a compelling model: instead of outsourcing our thinking to AI, we engage in a step-by-step dialogue where both human and AI contribute their strengths. This approach mirrors the very principles that make learning stick: active engagement, iterative practice, and building genuine understanding.

Just as we wouldn’t learn baseball by watching robots play, we shouldn’t learn with AI by letting it do all the work. The key is to use AI as a learning partner that helps us:

- Generate challenging practice scenarios

- Break down complex problems into manageable steps

- Provide immediate feedback and alternative perspectives

- Support active recall through guided questioning

I’ve had the privilege of being part of Answer.ai’s first cohort for their “Solve It With Code” program, and it’s been a revelation in how AI can enhance rather than replace human learning. Initially, the process felt slow - deliberately so. Rather than getting quick solutions, I found myself engaged in thoughtful dialogue with the AI, breaking down problems and building understanding piece by piece.

What’s fascinating is how this approach naturally expanded beyond coding. I started using similar dialogue patterns to explore physics concepts, work through mathematical proofs, and even tackle complex topics in other fields. The AI became a patient learning partner that would:

- Help me decompose difficult concepts into manageable chunks

- Ask probing questions to expose gaps in my understanding

- Suggest alternative perspectives when I got stuck

- Guide me toward discoveries rather than simply providing answers

The platform isn’t just a ChatGPT-style application. It’s more like a Jupyter Notebook combined with an AI teacher.

Human + AI = Real Learning

The key insight was that slower, more deliberate interaction with AI often led to deeper, more lasting understanding. Instead of the instant gratification of immediate answers, I experienced the productive struggle that both “Make It Stick” and Perkins advocate for - but with an AI partner to help guide and scaffold the learning process.

After all, if our goal is to develop learners who can navigate and innovate in an AI-powered world, we need to focus on the uniquely human aspects of learning: finding meaningful problems, making novel connections, and engaging in the productive struggles that build real understanding. The key isn’t to resist AI tools, but to use them in ways that support rather than short-circuit these deeper learning processes.