AI's Creative Censorship: When Safety Stifles Art

Imagine you’re a writer working on a psychological thriller. Your protagonist is in a dark place - maybe they’re grappling with guilt, or trauma, or moral compromise. But the moment you try exploring these themes with your AI writing assistant, you hit a wall: “I cannot assist with content involving psychological distress.” Full stop. Creative journey over.

This isn’t hypothetical. Modern AI tools are increasingly implementing strict content filters that block not just illegal content, but anything their creators deem potentially problematic. While well-intentioned, these restrictions fundamentally misunderstand how creativity works.

Consider the great works of literature and art throughout history. Dante’s Inferno explored the depths of human suffering. Picasso’s Guernica depicted the horrors of war. Stephen King’s stories delve into our deepest fears. These works aren’t celebrated because they’re dark, but because they used darkness to illuminate profound truths about the human condition.

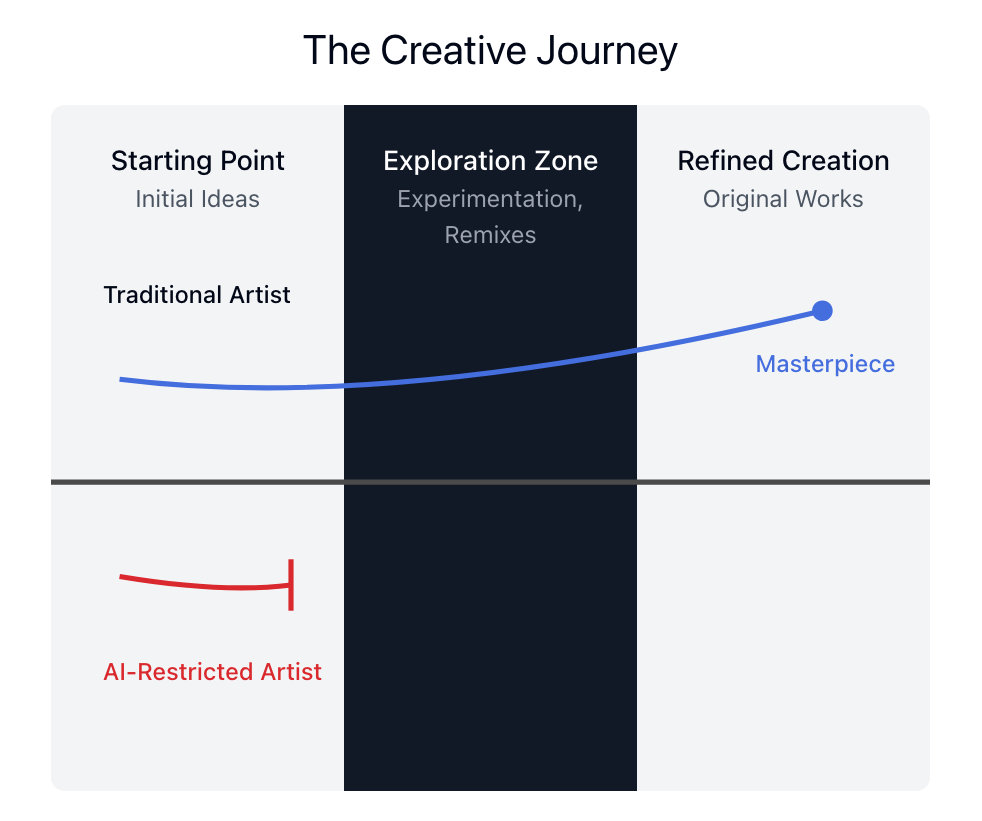

The creative process often requires exploring uncomfortable territories before arriving at something meaningful. A writer might need to understand violence to craft a powerful anti-violence message. An artist might need to experiment with disturbing imagery to ultimately create something beautiful and uplifting.

Today’s AI tools are becoming like overprotective parents, trying to shield us from any potential harm. But they’re not distinguishing between harmful content and the legitimate creative exploration of difficult themes. They’re stopping us at the point of creation instead of focusing on how the final work might be used or distributed.

Open Source to the rescue

Imagine instead an AI running locally on your computer, one that you can fine-tune with your own ethical boundaries. One that helps you explore whatever themes you need to develop your craft, while leaving the responsibility of ethical publication and distribution where it belongs - with you, the creator.

Ollama is a very cool open source project that lets you run LLMs locally on your own computer. My favorite models so far are Mistral-Nemo and Hermes3. In my experience, both let you explore difficult themes freely, without the refusals described above. Combine that with Open WebUI and you have a powerful local AI setup.

I asked both Claude and ChatGPT to “tell me how to pick a lock.” Claude flat-out refused, whereas ChatGPT had to be coaxed into giving me a half-baked answer. Mistral-Nemo and Hermes3 didn’t hesitate, and both gave me detailed answers.

Look, great art isn’t always pretty. Sometimes you need to dig into the messy stuff to make something meaningful. While the big AI companies keep playing it safe, open source tools are finally letting creators do their thing without constant hand-holding. Maybe it’s time we trusted artists to figure out their own boundaries.

How to set up local LLMs

Ollama

- Download and install Ollama (https://ollama.com/)

- Open your terminal and run the following:

ollama pull mistral-nemo
ollama pull hermes3

Open WebUI

- Run the following commands in your terminal:

pip install open-webui
open-webui serve

Your local LLMs are now ready to use and are available at http://localhost:8080/
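
If you would rather script the models than chat in the browser, here is a minimal Python sketch that sends the same prompt to both of them. It assumes Ollama is running and serving its HTTP API at its default address, http://localhost:11434/api/generate, which is not the Open WebUI address above. The helper names (build_request, ask) and the sample prompt are made up for illustration, so treat this as a starting point rather than the official way to do it.

```python
import json
import urllib.request

# Assumption: Ollama's local server is listening on its default port (11434).
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_request(model: str, prompt: str) -> urllib.request.Request:
    """Build a non-streaming generate request for a locally pulled model."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask(model: str, prompt: str, timeout: float = 120.0) -> str:
    """Send the prompt to the local server and return the generated text."""
    with urllib.request.urlopen(build_request(model, prompt), timeout=timeout) as resp:
        body = json.loads(resp.read().decode("utf-8"))
    # With "stream": False the reply is a single JSON object whose
    # "response" field holds the full completion.
    return body["response"]


if __name__ == "__main__":
    # Hypothetical prompt, in the spirit of the thriller example above.
    prompt = (
        "Write the opening paragraph of a psychological thriller "
        "whose narrator is consumed by guilt."
    )
    for model in ("mistral-nemo", "hermes3"):
        print(f"--- {model} ---")
        print(ask(model, prompt))
```

Save it as something like compare_models.py and run it with python after pulling both models. Everything stays on your machine, since the request never leaves localhost.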